In this article we will see how to automate backups from our local machine to AWS S3 using the sync command.

Requirements:

AWS CLI should be configured on the local Linux machine

Internet connectivity [of course]

In our previous posts we saw how to install and configure the AWS CLI on a local machine. If you don’t know how to do it, please check my previous posts.

Steps to Automate Backup:

- Create the S3 bucket where we will send our data for backup

- Use the AWS CLI to issue the backup command

- Use cron to schedule the backup
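Before working through the steps above, it can help to confirm that the requirements are actually met. The following is a minimal sketch and not part of the original setup: `check_prereqs` is a hypothetical helper name, and it only checks that a command is on the PATH and that the backup directory exists. It does not verify AWS credentials or connectivity.

```shell
#!/bin/sh
# check_prereqs CMD DIR
# Hypothetical helper: succeeds only if CMD is on the PATH and DIR exists.
check_prereqs() {
    cmd=$1
    dir=$2
    if ! command -v "$cmd" >/dev/null 2>&1; then
        echo "missing command: $cmd" >&2
        return 1
    fi
    if [ ! -d "$dir" ]; then
        echo "missing directory: $dir" >&2
        return 2
    fi
    echo "ok: $cmd found, $dir exists"
}

# Example call (assumes the directory used in this article):
# check_prereqs aws /opt/tekco-backup
```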

So let’s start:

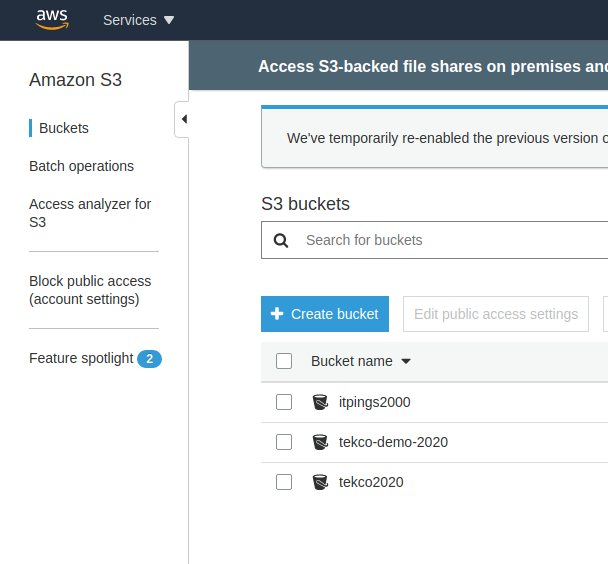

If you remember from our last post, we already created an S3 bucket named tekco2020, as shown in the image. We can use it to back up our data.

The directory on our local computer that we would like to back up is /opt/tekco-backup

    root@Red-Dragon:/opt/tekco-backup# pwd
    /opt/tekco-backup
    root@Red-Dragon:/opt/tekco-backup# ls
    fb-https.txt itpings-curl.txt password-curl-header.txt tekco.net-https-info.txt itpings-curl-header.txt password-2-curl-header.txt tekco.net-https-info2.txt

As we can see above, there is data in our tekco-backup folder.

Now issue the command below to start backing up the data to the AWS S3 bucket:

    root@Red-Dragon:/opt/tekco-backup# aws s3 sync . s3://tekco2020
    upload: ./itpings-curl.txt to s3://tekco2020/itpings-curl.txt
    upload: ./itpings-curl-header.txt to s3://tekco2020/itpings-curl-header.txt
    upload: ./password-curl-header.txt to s3://tekco2020/password-curl-header.txt
    upload: ./password-2-curl-header.txt to s3://tekco2020/password-2-curl-header.txt
    upload: ./tekco.net-https-info2.txt to s3://tekco2020/tekco.net-https-info2.txt
    upload: ./tekco.net-https-info.txt to s3://tekco2020/tekco.net-https-info.txt
    upload: ./fb-https.txt to s3://tekco2020/fb-https.txt
    root@Red-Dragon:/opt/tekco-backup#

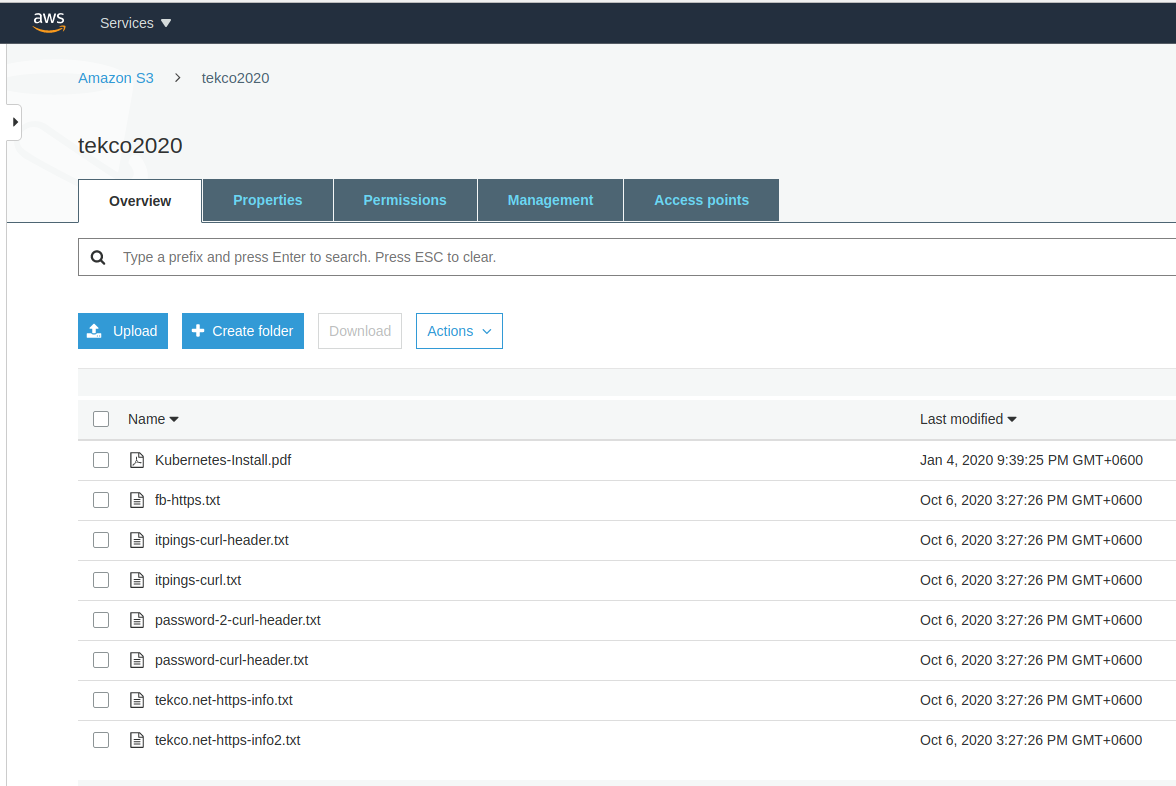

We can see that the files were uploaded successfully. Let’s confirm it from the AWS S3 console.

Great, we can see that our data is in the bucket. Now let’s create a file and rerun the same command.

    root@Red-Dragon:/opt/tekco-backup# touch sal.txt
    root@Red-Dragon:/opt/tekco-backup# aws s3 sync . s3://tekco2020
    upload: ./sal.txt to s3://tekco2020/sal.txt

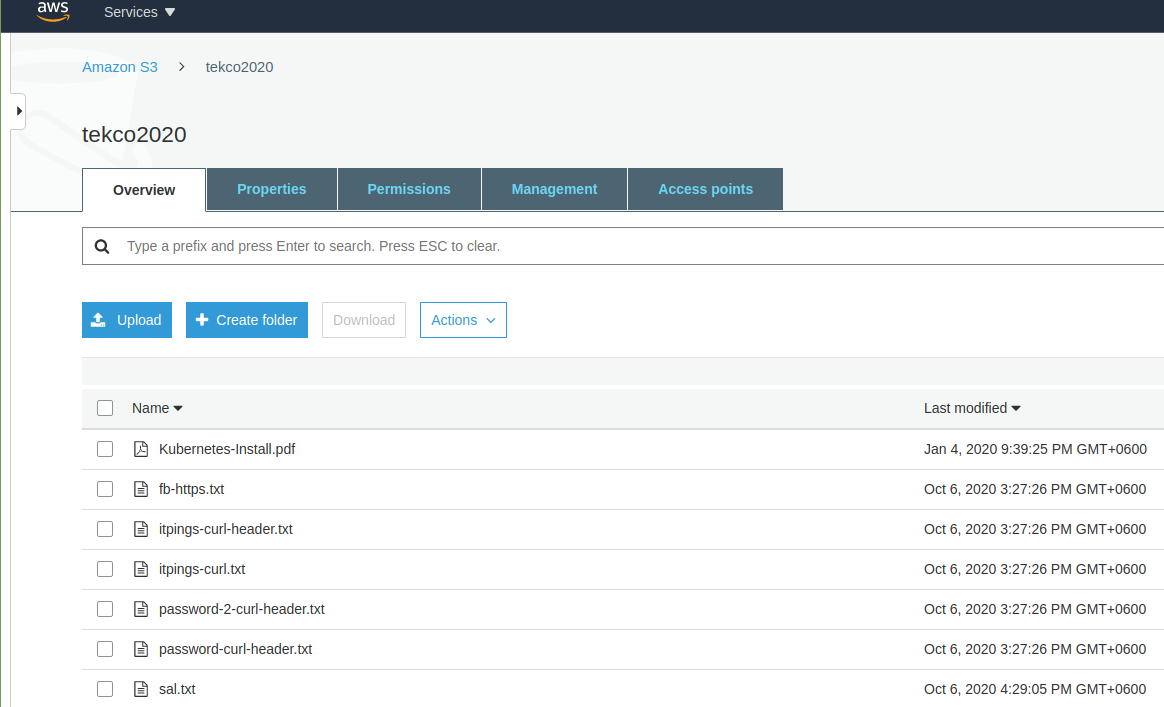

As we can see, this time only the new file was copied to the S3 bucket. Let’s confirm it from the AWS S3 console.
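Because sync only transfers files that are new or have changed, it can be useful to preview what a run would upload before it touches the bucket. The sketch below uses the `--dryrun` option of `aws s3 sync`, which lists the planned operations without performing them. The `sync_preview` function name and the `AWS_BIN` variable are assumptions made for this example, not part of the original setup.

```shell
#!/bin/sh
# AWS_BIN is an assumption of this sketch: it lets the aws binary be swapped
# out (for example with "echo") so the command can be inspected without
# touching S3.
AWS_BIN=${AWS_BIN:-aws}

# sync_preview SRC BUCKET
# Runs "aws s3 sync" with --dryrun, which lists the planned operations
# without transferring any data.
sync_preview() {
    "$AWS_BIN" s3 sync "$1" "s3://$2" --dryrun
}

# Example call with the directory and bucket from this article:
# sync_preview /opt/tekco-backup tekco2020
```

Dropping `--dryrun` from the command performs the real upload, exactly as in the manual run shown earlier.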

Great! Now, to automate the backup task, we will set up cron. For this demo I will schedule the backup to run every minute. So let’s do it.

root@Red-Dragon:/opt/tekco-backup# crontab -e

Add the following line to the crontab:

*/1 * * * * /usr/local/bin/aws s3 sync /opt/tekco-backup/ s3://tekco2020

Save and quit, then list the crontab with the following command:

    root@Red-Dragon:/opt/tekco-backup# crontab -l
    # m h  dom mon dow   command
    */1 * * * * /usr/local/bin/aws s3 sync /opt/tekco-backup/ s3://tekco2020
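With a one-minute schedule, a sync that takes longer than a minute could overlap with the next run. One way to guard against that is to have cron call a small wrapper that skips a run while the previous one is still active. This is only a sketch under stated assumptions: `run_locked`, the lock directory path, and the log file path are hypothetical names chosen for illustration.

```shell
#!/bin/sh
# run_locked LOCKDIR COMMAND [ARGS...]
# Hypothetical helper: runs COMMAND only if LOCKDIR can be created.
# mkdir is atomic, so two concurrent runs cannot both take the lock.
run_locked() {
    lockdir=$1
    shift
    if ! mkdir "$lockdir" 2>/dev/null; then
        echo "previous run still active, skipping" >&2
        return 75
    fi
    "$@"
    status=$?
    rmdir "$lockdir"
    return "$status"
}

# Example use from a cron-invoked script (paths are assumptions):
# run_locked /tmp/tekco-backup.lock \
#     /usr/local/bin/aws s3 sync /opt/tekco-backup/ s3://tekco2020 \
#     >> /var/log/tekco-backup.log 2>&1
```

One caveat of this design: if a run is killed before it removes the lock directory, later runs keep skipping until the directory is deleted by hand. Where the `flock` utility from util-linux is available, it may be a more robust choice.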

Now restart cron, copy some files to the tekco-backup folder, and wait a minute to see if the backup starts automatically.

    root@Red-Dragon:/opt/tekco-backup# systemctl restart cron
    root@Red-Dragon:/opt/tekco-backup# systemctl status cron
    ● cron.service - Regular background program processing daemon
         Loaded: loaded (/lib/systemd/system/cron.service; enabled; vendor preset: enabled)
         Active: active (running) since Tue 2020-10-06 16:40:11 +06; 5s ago
           Docs: man:cron(8)
       Main PID: 18618 (cron)
          Tasks: 1 (limit: 18972)
         Memory: 520.0K
         CGroup: /system.slice/cron.service
                 └─18618 /usr/sbin/cron -f
    Oct 06 16:40:11 Red-Dragon systemd[1]: Started Regular background program processing daemon.
    Oct 06 16:40:11 Red-Dragon cron[18618]: (CRON) INFO (pidfile fd = 3)
    Oct 06 16:40:11 Red-Dragon cron[18618]: (CRON) INFO (Skipping @reboot jobs -- not system startup)

root@Red-Dragon:/opt/tekco-backup# touch mynewfile-aftercron.txt

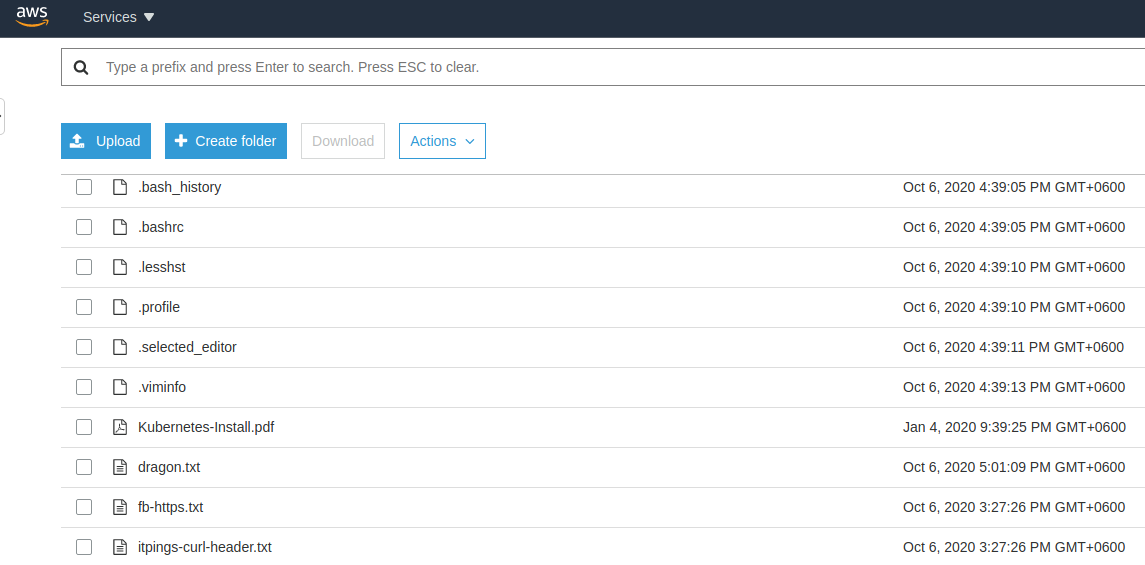

Now, after one minute, let’s check our bucket:

    root@Red-Dragon:/opt/tekco-backup# aws s3 ls s3://tekco2020
                               PRE .aptitude/
                               PRE .aws/
                               PRE .cache/
                               PRE .config/
                               PRE .dbus/
                               PRE .local/
                               PRE .ssh/
                               PRE .synaptic/
    2020-10-06 16:39:05      11147 .bash_history
    2020-10-06 16:39:05       3106 .bashrc
    2020-10-06 16:39:10         31 .lesshst
    2020-10-06 16:39:10        161 .profile
    2020-10-06 16:39:11         75 .selected_editor
    2020-10-06 16:39:13      12103 .viminfo
    2020-01-04 21:39:25      70522 Kubernetes-Install.pdf
    2020-10-06 15:27:26     148119 fb-https.txt
    2020-10-06 15:27:26        384 itpings-curl-header.txt
    2020-10-06 15:27:26      53772 itpings-curl.txt
    2020-10-06 16:59:06          0 mynewfile-aftercron.txt
    2020-10-06 15:27:26        242 password-2-curl-header.txt
    2020-10-06 15:27:26        187 password-curl-header.txt
    2020-10-06 16:29:05          0 sal.txt
    2020-10-06 15:27:26      71968 tekco.net-https-info.txt
    2020-10-06 15:27:26      71968 tekco.net-https-info2.txt
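Scanning a long listing by eye gets tedious, so the check for a specific file can be scripted. The following is a rough sketch, assuming the `aws s3 ls` output keeps the date, time, size, name layout shown in the listing; `list_keys` is a hypothetical helper name.

```shell
#!/bin/sh
# list_keys: reads "aws s3 ls" output on stdin and prints one object name
# per line. Prefix lines ("PRE dir/") start with spaces and are skipped.
list_keys() {
    awk '/^[0-9]/ { sub(/^[-0-9]+ +[0-9:]+ +[0-9]+ /, ""); print }'
}

# Example use (bucket name from this article):
# aws s3 ls s3://tekco2020 | list_keys | grep -x 'mynewfile-aftercron.txt'
```

With `grep -x`, the pipeline exits successfully only when a line matches the file name exactly, which makes it easy to use in a script.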

Perfect, we can see that our cron job is working and the file has been copied. Let’s add one more file, “dragon.txt”, and check after one minute from the AWS S3 console.

root@Red-Dragon:/opt/tekco-backup# touch dragon.txt

Great, our automatic backup is running every minute. You can adjust the schedule as per your requirements.
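To illustrate adjusting the schedule, here is a small sketch that prints crontab entries for a few common intervals. `cron_line` is a hypothetical helper, and the 15-minute and daily schedules are only examples. The aws path is the one used in this article and may differ on other systems.

```shell
#!/bin/sh
# cron_line SCHEDULE SRC BUCKET
# Hypothetical helper: prints a crontab entry that syncs SRC to BUCKET.
# The aws path is the one used in this article; adjust it if yours differs.
cron_line() {
    printf '%s /usr/local/bin/aws s3 sync %s s3://%s\n' "$1" "$2" "$3"
}

# Every minute (equivalent to the */1 form used in the demo):
cron_line '* * * * *' /opt/tekco-backup/ tekco2020
# Every 15 minutes:
cron_line '*/15 * * * *' /opt/tekco-backup/ tekco2020
# Once a day at 02:30:
cron_line '30 2 * * *' /opt/tekco-backup/ tekco2020
```

Any of the printed lines can be pasted into the editor opened by `crontab -e` in place of the one-minute entry.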

Thanks,

Salman A. Francis

https://www.youtube.com/linuxking

https://www.tekco.net